The NHTSA has now said it will investigate two separate crashes reportedly involving a Tesla electric car operating with the Autopilot feature engaged.

Media coverage of the May 7 death of a Model S driver in Florida continues to debate the merits of electronic driver-assistance technologies, often in remarkably uninformed language.

One Tesla Model S driver felt compelled to offer his views and to explain to a broader audience how many Tesla drivers see their cars and the Autopilot feature.


Mike Dushane has worked in the automotive industry, in various editorial and product development roles, for 20 years. He lives in the San Francisco area, and has owned a Model S since May 2015.

He tells us he's used pretty much every electronic driver-assistance aid in production. Those include the very first adaptive cruise control systems, which appeared in the U.S. on Mercedes-Benz vehicles in the late 1990s.

The rest of this article is in Dushane's words, edited for clarity and style.

![2015 Tesla Model S 70D, Apr 2015 [photo: David Noland]](https://images.hgmsites.net/lrg/2015-tesla-model-s-70d-apr-2015-photo-david-noland_100508389_l.jpg)

2015 Tesla Model S 70D, Apr 2015 [photo: David Noland]

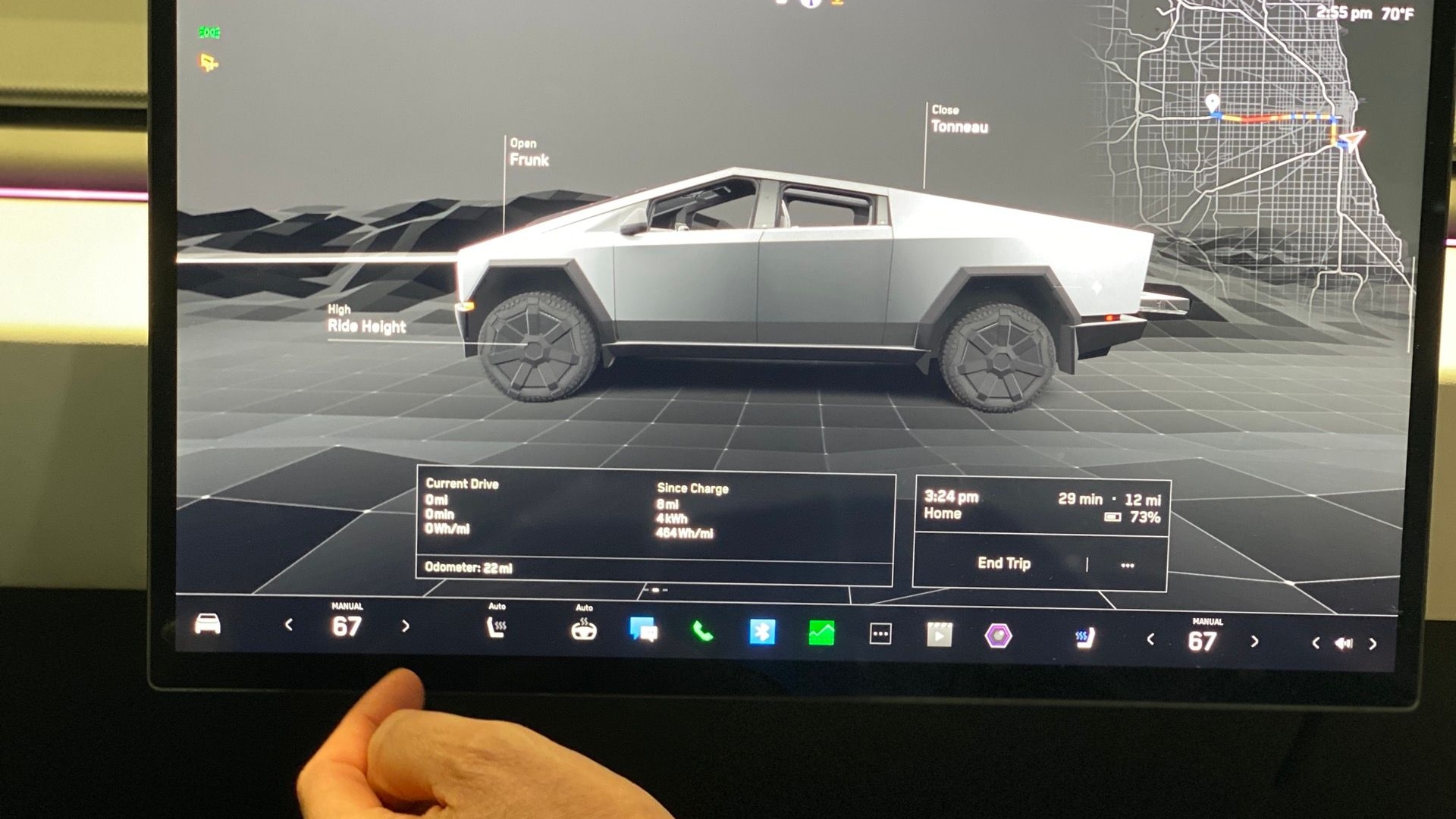

Much speculation about Tesla’s “Autopilot” has surfaced since news of a fatal accident involving the system earlier this year. Some of it treats the Tesla as an “autonomous car,” which it is not.

We paid $2,500 for "Autopilot convenience features" to be enabled in our Tesla Model S, and have since driven thousands of miles using the system. So perhaps I can shed some light on what the Autopilot system does—and how owners actually use it.

When we ordered our car in early 2015, I expected Tesla to roll out features that would make driving safer and less stressful by steering and braking on limited-access roads under normal conditions. Tesla met my expectations.


I did not expect a car that could drive itself.

Tesla owners in online forums and groups talk a lot about the potential of the car’s systems, but I think it's fair to say that we never expected the car to be autonomous. It simply doesn't have the hardware for that.

There's no laser-based (lidar) system to build a computer model of every potential threat and obstacle. There are just short-range proximity sensors around the car, plus a forward-facing camera and radar.

Tesla Autopilot sensor system

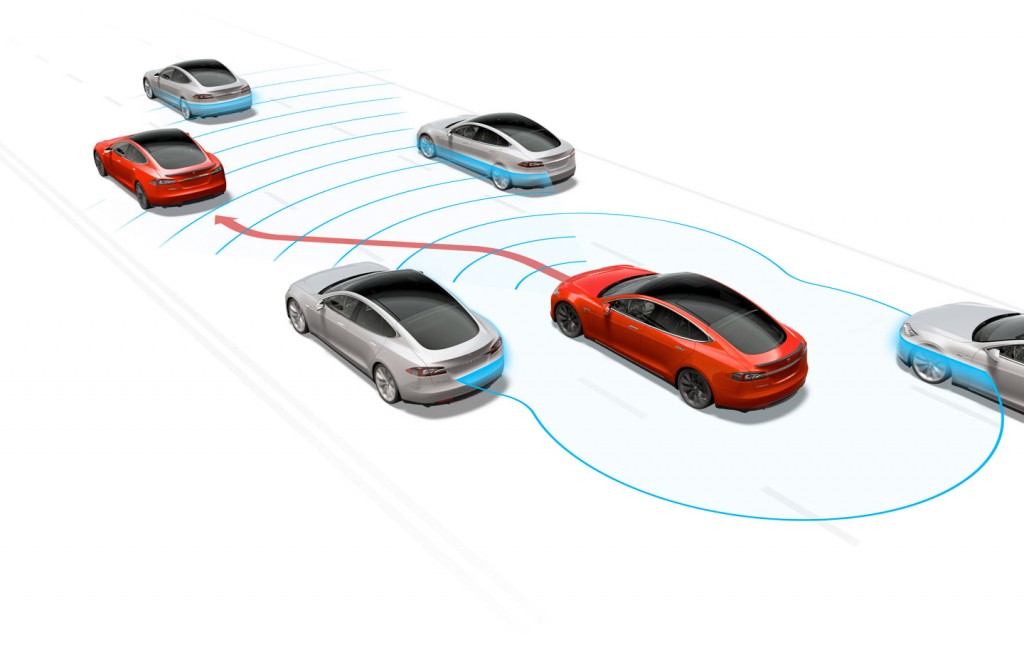

As a driver assistance function, Autopilot really does make driving safer and less stressful. But it has to be used as an aid, not a substitute for driver responsibility.

Other than gimmicks like "summon" (which brings the car a short distance to the driver), Autopilot is just adaptive cruise control plus very good automatic lane centering. That's it.

Those are great features for making a long highway slog less tiring and safer, because in many situations the car can react faster than a driver. But the Autopilot systems are not designed to handle cross traffic, merges, pedestrians, or any number of other situations every driver must be prepared to deal with.

Autopilot will keep the car in a lane and prevent it from banging into other vehicles also traveling in the same direction in that lane.

The Tesla community on the whole gets this. Of the thousands of Tesla drivers I've communicated with, none thinks it's an "autonomous car."


Driving this point home, the Autopilot system needs frequent intervention. When I crest a hill, approach a sharp curve, or drive on broken pavement or where the lane lines are worn, the system often demands my attention.

It flashes a warning that I need to assume control—and if I don't immediately do so, it beeps to drive the point home.

When the unexpected happens (traffic ahead screeches to a stop, or another driver looks like they might cut me off), I often take over before the car reacts or asks me to intervene.


Because I need to override the system so often, it would never occur to me to let the car operate without my full attention, least of all at high speed. I can't imagine not being ready to take control at any moment.

Ceding attention when the Autopilot system has so many limitations would be like counting on a car with normal (non-adaptive) cruise control to slow down for traffic in front of it—an irrational expectation.

That said, I've thought a lot about this tragic accident. A man died driving the same car as me, using the same technology I use every day.

![2015 Tesla Model S 70D, Apr 2015 [photo: David Noland]](https://images.hgmsites.net/lrg/2015-tesla-model-s-70d-apr-2015-photo-david-noland_100508387_l.jpg)

2015 Tesla Model S 70D, Apr 2015 [photo: David Noland]

Perhaps in Florida, where the accident occurred and the roads are straight, smooth, and flat, a driver is more easily lulled into inattention than in Northern California, where hilly terrain and frequent curves demand more frequent intervention.

I keep coming back to this: by the numbers, one death in 130 million miles of Autopilot driving beats the overall fatality rate for driving in general.

We also don't know how much inattention contributed to this accident. A single case in which the system failed to prevent a crash it was never designed to prevent won't change how I use Autopilot. I'm a safer driver when the car has both my attention and that of its computer monitoring systems.


My guess is that one result of this accident may be that Tesla will require drivers to keep their hands on the wheel for Autosteer to work, on the assumption that a driver with hands on the wheel is more likely to keep eyes on the road. That's how most other carmakers now program their systems.

I wouldn't be thrilled about that, but if it saves inattentive drivers from their own lack of responsibility, I'd accept it.

And I'd pay $2,500 all over again for adaptive cruise control and hands-on-wheel lane centering as good as Tesla's.

![2015 Tesla Model S P85D, May 2015 [photo: George Parrott]](https://images.hgmsites.net/lrg/2015-tesla-model-s-p85d-may-2015-photo-george-parrott_100541722_l.jpg)

2015 Tesla Model S P85D, May 2015 [photo: George Parrott]

I hope Joshua Brown didn't die in vain. We may never know exactly what was going on in Josh’s car, but it seems possible that evasive action by the driver could have prevented the crash or reduced its severity.

I hope the attention his death has drawn helps consumers better understand the limitations of semi-autonomous driver aids.

And I hope it leads to much greater awareness that, until fully autonomous cars are available (many years away, I'd guess), we need to pay attention behind the wheel.

Always.
